Blueprint Information Extraction: Computer Vision + LLMs vs Vision-Language Models

Integrated AI Architectures for Superhuman Accuracy

Member of Technical Staff @ Brain Co

Leveraging Both Specialized CV Models and Foundation VLMs for Blueprint Analysis

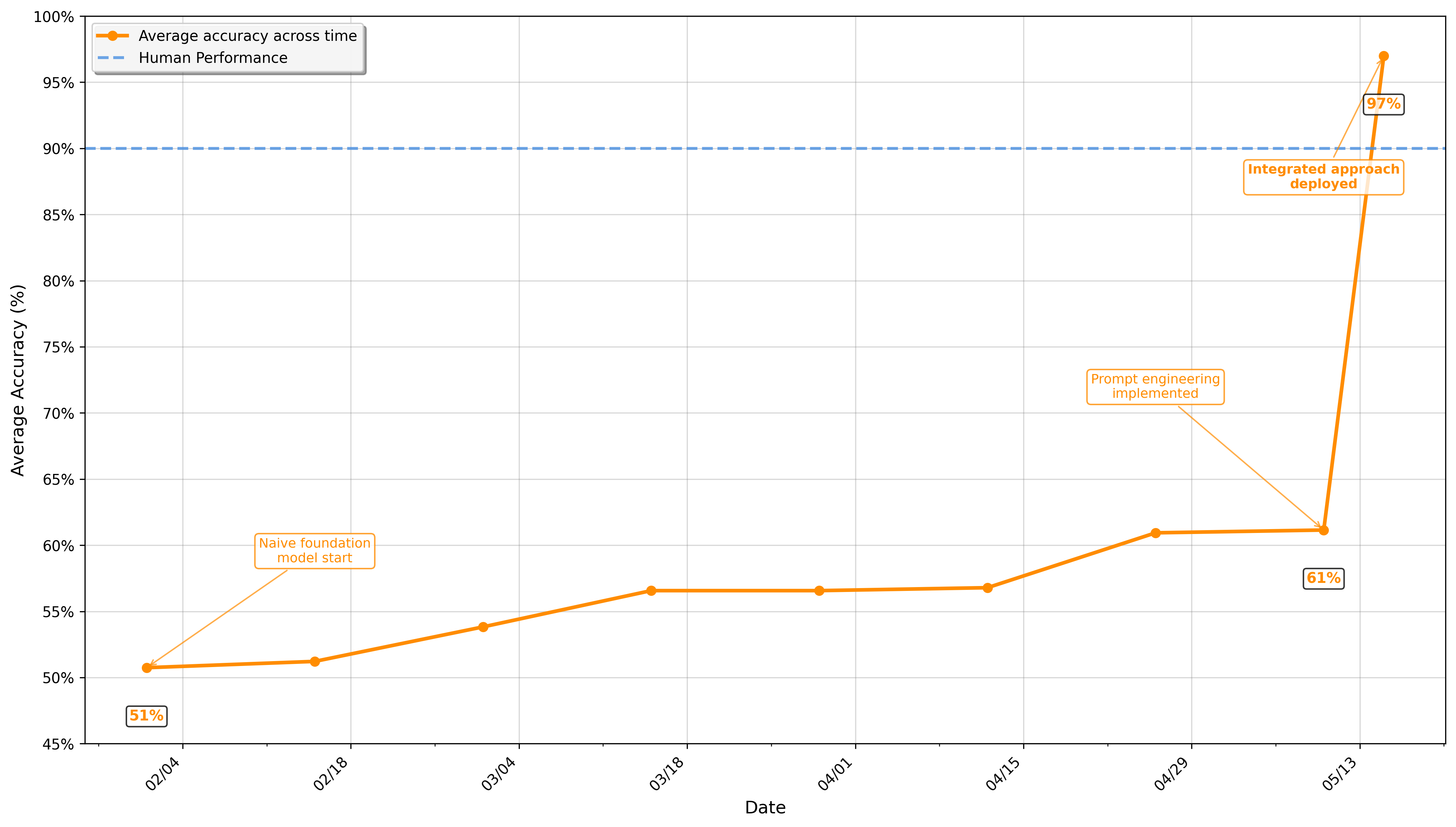

We developed a production, state-of-the-art blueprint analysis system that leverages both small, task-specific computer vision models and large multi-modal foundation models in conjunction. Specialized CV models excel at precise element detection and measurement extraction, but struggle with extracting and reasoning about text. Conversely, while large foundation models excel at extracting and reasoning about text written on drawings and are able to perform less-constrained tasks, they struggle with precise element detection and often hallucinate when presented with too much visual information. Our integrated approach achieves 96.8% overall accuracy across all information we extract from the plans by combining precise element extraction from small, task-specific models with the textual understanding and reasoning ability of large foundation models.

Architectural blueprints demand both microscopic precision and macroscopic understanding. An engineer analyzing a commercial building floor plan needs exact measurements (door width: 36", ceiling height: 9'6") while simultaneously understanding spatial relationships (restroom accessibility compliance) and design intent (fire egress routing). No single AI approach handles this full spectrum optimally.

Our production system processes thousands of architectural applications by leveraging specialized computer vision models for precise detection alongside foundation Vision-Language Models for contextual validation. This isn't about choosing one approach over another; it's about orchestrating integrated AI capabilities to achieve professional-grade analysis accuracy.

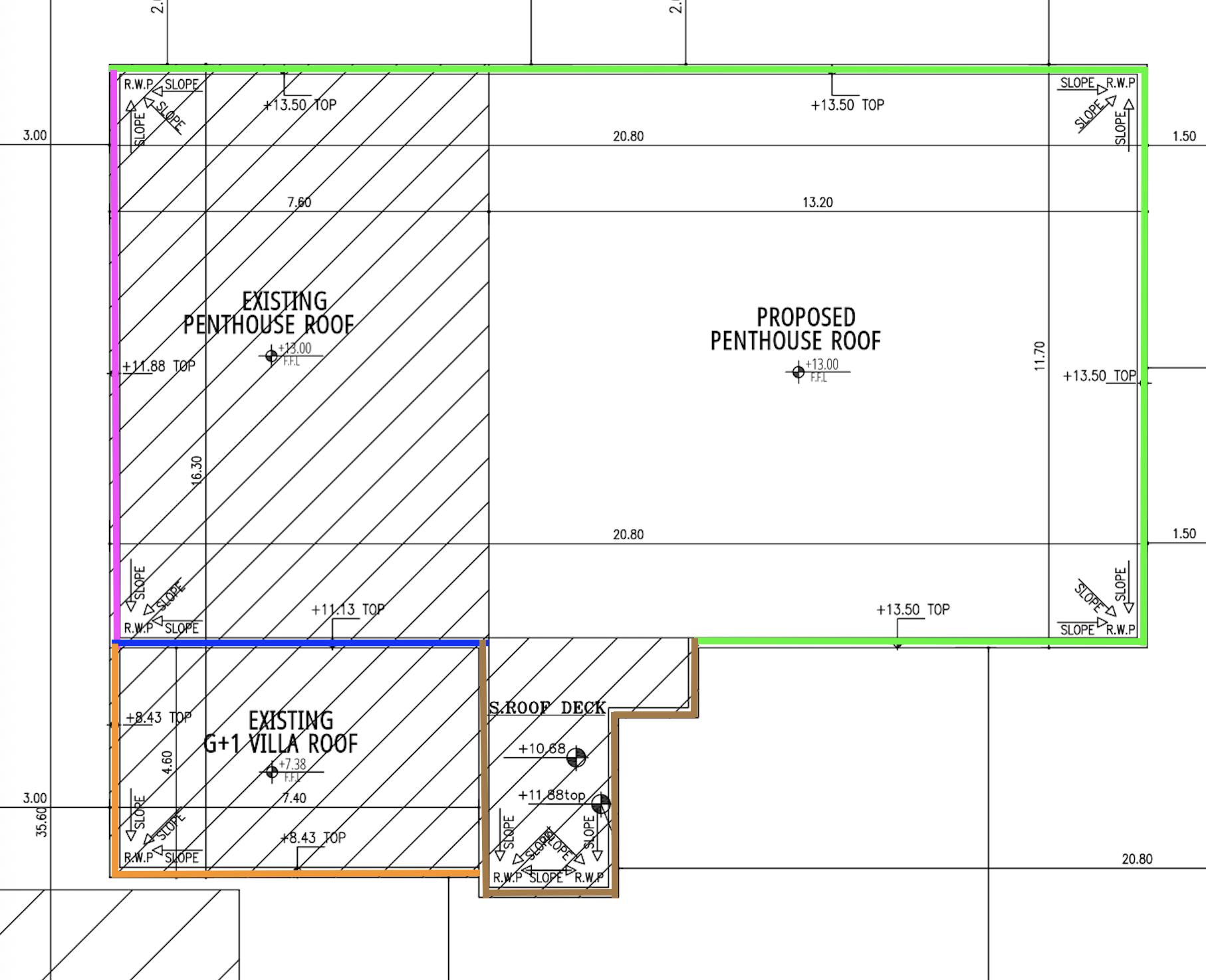

What are parapets?

Parapets are railings on the edge of roofs. They are typically required on accessible roofs for the safety of those on the roof.

How are parapets represented on plans?

Each color represents a single parapet. We know a parapet is present wherever a “TOP” (top of parapet) marker appears, and we drew a colored line along each such side. As you can see, homes with accessible roofs have many different parapets. However, most of these parapets are not on accessible segments of the roof and are therefore not relevant to extract. Specifically:

Note: The true height of the orange parapet is 1.05 m, which is less than the minimum required height of 1.2 m. We can determine this by reading the finished floor level from the FFL marker (7.38 m) and subtracting it from the top-of-parapet (TOP) height (8.43 m).
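The subtraction in the note can be written out as a short check. This is a minimal sketch: the function names and the rounding to two decimals are assumptions made for illustration, while the 1.2 m minimum and the marker values are the ones quoted in the note.

```python
MIN_PARAPET_HEIGHT_M = 1.2  # minimum required height quoted in the note


def parapet_height(top_m: float, ffl_m: float) -> float:
    """Parapet height = top of parapet (TOP) minus finished floor level (FFL)."""
    # Round to centimetres so floating-point noise does not leak into the result.
    return round(top_m - ffl_m, 2)


def is_compliant(height_m: float, minimum_m: float = MIN_PARAPET_HEIGHT_M) -> bool:
    """A parapet complies when it is at least as tall as the required minimum."""
    return height_m >= minimum_m


orange = parapet_height(top_m=8.43, ffl_m=7.38)
print(orange, is_compliant(orange))  # 1.05 False
```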

There are several approaches one can take to extracting the height of the parapet surrounding the accessible segment of the roof.

The obvious one is to naively use a state-of-the-art large foundation model. That would look something like this:

```python
import base64
import re

import openai


def parse_height_from_response(text: str) -> float:
    """Illustrative stand-in (the original helper is not shown): first number in the reply."""
    match = re.search(r"\d+(?:\.\d+)?", text)
    if match is None:
        raise ValueError(f"No height found in response: {text!r}")
    return float(match.group())


def extract_parapet_height(image_path: str) -> float:
    """Extract parapet height using foundation model only."""
    # Convert image to base64
    with open(image_path, "rb") as f:
        image_base64 = base64.b64encode(f.read()).decode()

    prompt = """
    Analyze this architectural site plan and extract the minimum parapet height.
    Look for:
    - "TOP" markings (top of parapet height)
    - "FFL" markings (finished floor level)
    - Calculate: Parapet Height = TOP - FFL
    Return only the smallest parapet height found.
    """

    response = openai.chat.completions.create(
        # Model name kept from the original listing; it may need to be
        # replaced with a currently available vision-capable model.
        model="gpt-4-vision-preview",
        messages=[{
            "role": "user",
            "content": [
                {"type": "text", "text": prompt},
                # The data URL assumes a JPEG input image.
                {"type": "image_url", "image_url": {
                    "url": f"data:image/jpeg;base64,{image_base64}"
                }},
            ],
        }],
        temperature=0,
    )
    return parse_height_from_response(response.choices[0].message.content)
```

We evaluated this approach on hundreds of documents and found that multimodal foundation models, even state-of-the-art models like GPT-5 and Gemini 2.5 Pro, struggle with this task and often return the height of the wrong parapet. Empirically, accuracy was about 60% when using only a foundation model, because these models struggle with precise element detection and often hallucinate when presented with too much visual information.

Another approach we considered is to train a small, task-specific computer vision model end-to-end for this task. Specifically, this model would take an image as input and directly output a number corresponding to the smallest parapet height on the accessible roof of the villa. To do this, we would have to do the following:

However, this approach would have the following challenges:

Due to these challenges, we decided to exhaust all other possible strategies before attempting this one.

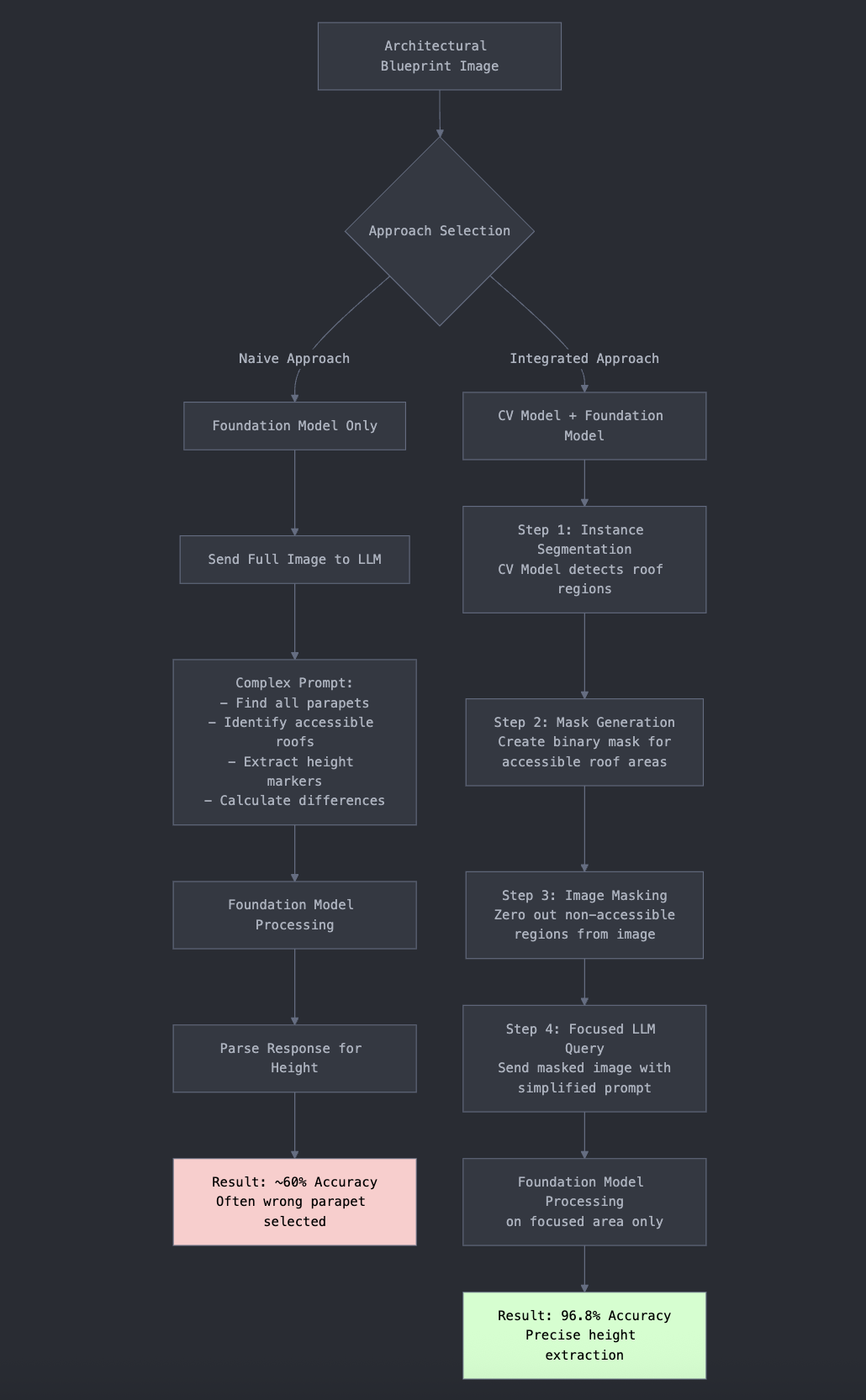

The approach we’ve ultimately found to be the most performant and thus deployed to production is to combine the strengths of task-specific computer vision models and foundation models.

The diagram below illustrates how our integrated approach differs from the naive foundation-model-only method:

As the diagram shows, our integrated pipeline uses a specialized computer vision model to first identify and isolate the accessible roof regions, then applies a foundation model only to the relevant masked areas. This dramatically improves accuracy by leveraging each model type for what it does best.

Specifically, we trained a small instance segmentation model to detect where the accessible roof was in the document, masked out the non-accessible parts of the roof from the image and simply used the foundation model to extract the height of the smallest parapet in the drawing.

The code below runs the small instance segmentation model to detect the accessible roof, uses the model output to create a masked image like the one above, and then uses a foundation model to extract the minimum parapet height by reading the height markers drawn on the document:

```python
import cv2
import numpy as np
from PIL import Image
from transformers import pipeline


class IntegratedBlueprintAnalyzer:
    def __init__(self):
        # Load segmentation model for roof detection.
        # This is a placeholder for a custom model.
        self.segmentation_model = pipeline(
            "image-segmentation",
            model="facebook/detr-resnet-50-panoptic",
        )

    def analyze_parapet_height(self, image_path: str) -> dict:
        """Main analysis pipeline combining CV and LLM."""
        # Step 1: Load image (cv2.imread returns None if the file cannot be read)
        image = cv2.imread(image_path)
        if image is None:
            raise FileNotFoundError(f"Could not read image: {image_path}")

        # Step 2: Detect accessible roof regions using CV model
        roof_mask = self.detect_accessible_roof(image)

        # Step 3: Mask out non-accessible areas
        masked_image = self.apply_mask(image, roof_mask)

        # Step 4: Use foundation model on focused image
        parapet_height = self.query_llm_for_height(masked_image)

        roof_area = int(np.sum(roof_mask))  # number of mask pixels, as a plain int
        return {
            "parapet_height": parapet_height,
            "accessible_roof_area": roof_area,
            "confidence": "high" if roof_area > 1000 else "medium",
        }

    def detect_accessible_roof(self, image):
        """Use specialized CV model to find accessible roof regions."""
        # The pipeline takes a PIL image; OpenCV loads BGR arrays, so convert to RGB first.
        pil_image = Image.fromarray(cv2.cvtColor(image, cv2.COLOR_BGR2RGB))
        segments = self.segmentation_model(pil_image)

        # Create mask for roof-related segments
        mask = np.zeros(image.shape[:2], dtype=np.uint8)
        roof_keywords = ["roof", "terrace", "deck", "accessible"]
        for segment in segments:
            if any(keyword in segment["label"].lower() for keyword in roof_keywords):
                segment_mask = np.array(segment["mask"]) > 0
                mask = np.logical_or(mask, segment_mask).astype(np.uint8)
        return mask

    def apply_mask(self, image, mask):
        """Zero out non-accessible areas."""
        masked_image = image.copy()
        masked_image[mask == 0] = 0
        return masked_image

    def query_llm_for_height(self, masked_image):
        """Query foundation model on the masked/focused image."""
        # Convert to base64 and send to LLM with focused prompt
        # (Implementation similar to naive approach but on masked image)
        raise NotImplementedError("LLM query on the masked image is not shown here")
```
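The stubbed `query_llm_for_height` step could reuse the request shape from the naive approach. The sketch below shows only the payload construction, assuming the masked image has already been encoded to PNG bytes (for example with `cv2.imencode`). The helper names `to_data_url` and `build_height_request` and the prompt wording are hypothetical, not the production code.

```python
import base64


def to_data_url(image_bytes: bytes, mime_type: str = "image/png") -> str:
    """Wrap raw image bytes in a base64 data URL."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return f"data:{mime_type};base64,{encoded}"


def build_height_request(masked_png: bytes) -> list:
    """Build chat messages asking for the smallest parapet height in a masked plan."""
    # Hypothetical prompt: the mask has already removed non-accessible roof areas,
    # so the model only has to read the remaining TOP and FFL markers.
    prompt = (
        "Only the accessible roof is visible in this plan. "
        'Using the "TOP" and "FFL" markings, compute TOP - FFL for each parapet '
        "and return only the smallest parapet height."
    )
    return [{
        "role": "user",
        "content": [
            {"type": "text", "text": prompt},
            {"type": "image_url", "image_url": {"url": to_data_url(masked_png)}},
        ],
    }]


# Placeholder bytes stand in for a real encoded image.
messages = build_height_request(b"\x89PNG placeholder bytes")
```

The resulting `messages` list has the same structure as the one passed to the chat-completions call in the naive approach, so the only difference between the two calls would be the masked input image and the narrower prompt.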

The solution we built does not require our ML stack to run in real time. This greatly simplifies our production serving stack, but there is still some complexity in how we serve model inference requests:

Blueprint Dataset:

By using each class of model for its strengths and combining them to offset their weaknesses, we achieved 97% accuracy on determining whether each attribute of a plan complied with building codes, compared with only ~60% accuracy for the naive approach.
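One way to read the per-attribute accuracy figure is that each (plan, attribute) compliance decision is scored against a reference label. The sketch below is an assumption about how such a metric could be computed, using made-up toy labels; it is not the evaluation harness or the data behind the numbers reported here.

```python
def attribute_accuracy(predicted: dict, expected: dict) -> float:
    """Fraction of (plan, attribute) compliance decisions that match the labels."""
    if not expected:
        raise ValueError("expected labels must not be empty")
    # A missing prediction counts as wrong because None never equals True or False.
    correct = sum(predicted.get(key) == label for key, label in expected.items())
    return correct / len(expected)


# Toy labels for illustration only: True means the attribute is compliant.
expected = {
    ("plan_a", "parapet_height"): False,
    ("plan_a", "door_width"): True,
    ("plan_b", "parapet_height"): True,
    ("plan_b", "door_width"): True,
}
predicted = {
    ("plan_a", "parapet_height"): True,  # wrong: the parapet is too short
    ("plan_a", "door_width"): True,
    ("plan_b", "parapet_height"): True,
    ("plan_b", "door_width"): True,
}

print(attribute_accuracy(predicted, expected))  # 0.75
```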

Architectural blueprint analysis represents a broader challenge in technical document understanding: balancing precision requirements with contextual interpretation capabilities. Our systematic evaluation reveals that different AI approaches excel at fundamentally different aspects of this challenge.

Key Technical Insights:

The result is a framework for intelligent blueprint analysis that routes tasks to optimal AI approaches based on extraction requirements, delivering both the precision needed for quantitative analysis and the contextual understanding required for qualitative assessment.