Confidence Scoring with Bayesian Networks

Brain Co. uses a Bayesian Network approach to confidence scoring that enables full automation of the building permit approval process (Figure 1).

Member of Technical Staff @ Brain Co

The running joke inside Brain Co. is that if you want to extend your deck in the Bay Area, the permits will take 2 years. The first time this joke was told it was 6 months, and the duration has increased by a couple of months every time it's retold. [1] If you are a homeowner, you already understand how painful this aspect of the American dream is, not just in America but all over the world.

Using AI in construction permitting is straightforward:

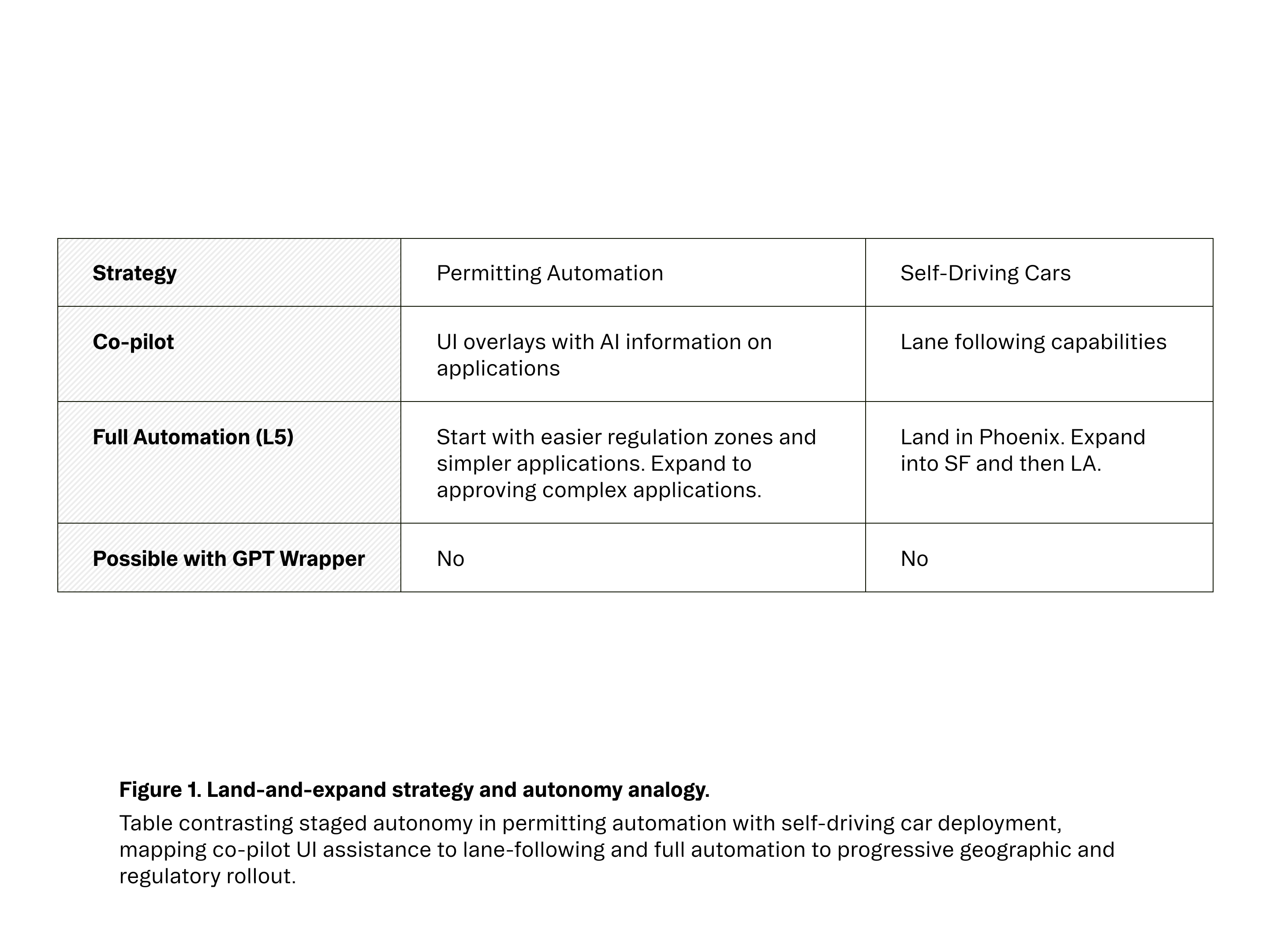

On the government side, we are actively developing a system that can fully automate the approval process. The problem space is complex, and so we are tackling the problem with a land-and-expand approach. As summarized in Figure 1, we follow a staged autonomy pathway, starting with co-pilot decision support and expanding toward full automation as confidence and reliability mature.

Permitting automation comes with relatively high consequences, so it needs high accuracy and graceful degradation out of the gate. Unfortunately, Large Language Models (and neural networks) occasionally (frequently) make stuff up. A common approach in complex problem spaces like this is to build additional systems that verify candidate solutions in parallel with solution generation. From Retrieval-Augmented Generation to DeepMind's OG AlphaCode, we see ML systems incorporate things like re-ranking, filtering, or validation. Confidence scoring can similarly improve the performance of systems that generate solutions, because we can threshold out low-confidence decisions. [2]
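Thresholding is simple enough to sketch in a few lines. This is an illustration rather than our production code: the `Decision` type, its field names, and the 0.9 cutoff are all made up for the example.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    # Hypothetical record for one regulation check on one application.
    check_id: str
    passed: bool
    confidence: float  # in [0, 1]


def route(decisions, threshold=0.9):
    """Split decisions into (automated, needs_review) by confidence.

    The threshold is an assumed value; in practice it would be tuned
    against the accuracy the deployment needs.
    """
    automated, needs_review = [], []
    for d in decisions:
        if d.confidence >= threshold:
            automated.append(d)
        else:
            needs_review.append(d)
    return automated, needs_review


decisions = [
    Decision("bedroom_has_window", passed=True, confidence=0.97),
    Decision("setback_distance", passed=False, confidence=0.62),
]
automated, needs_review = route(decisions)
```

Raising the threshold trades coverage for accuracy: fewer decisions are automated, but the ones that are should be wrong less often.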

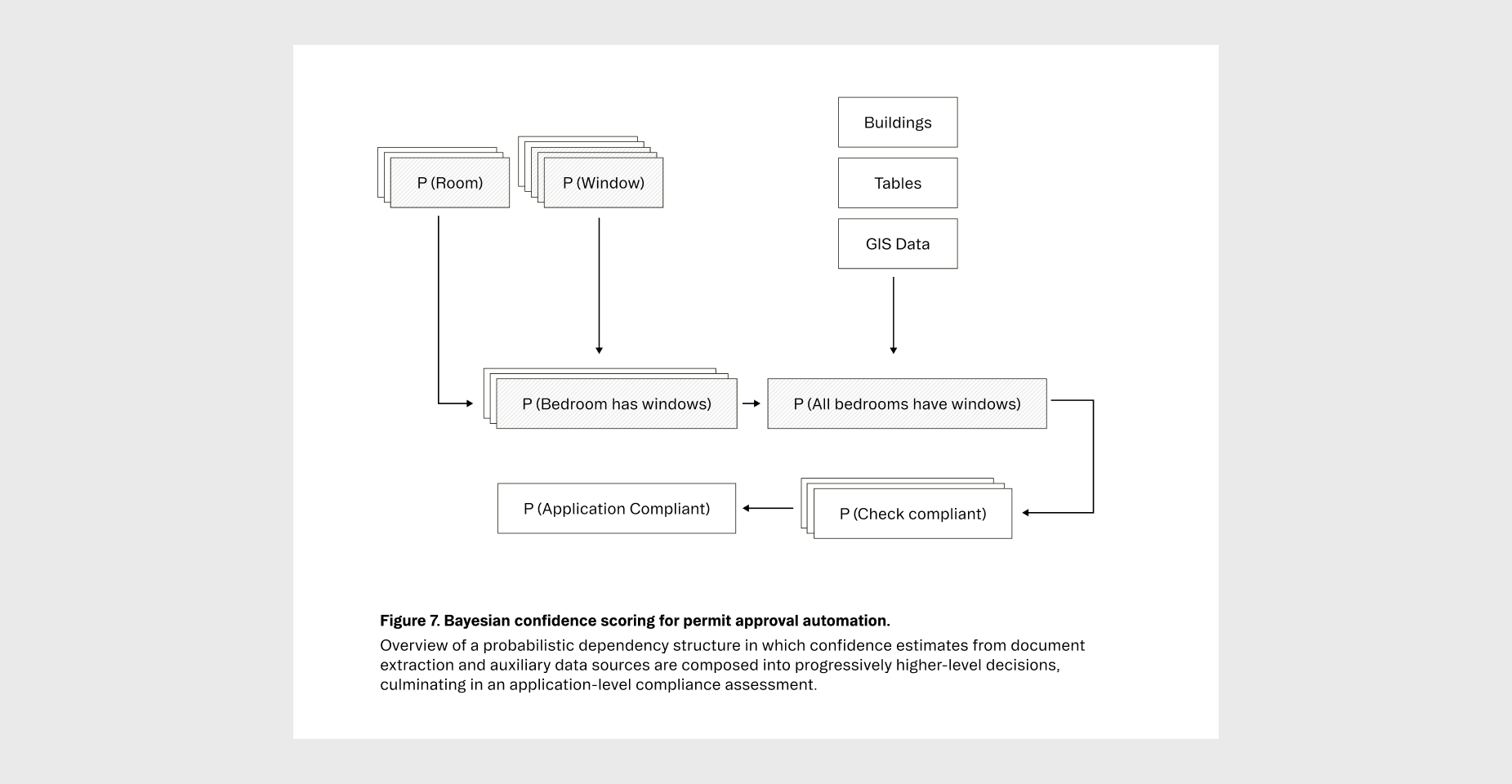

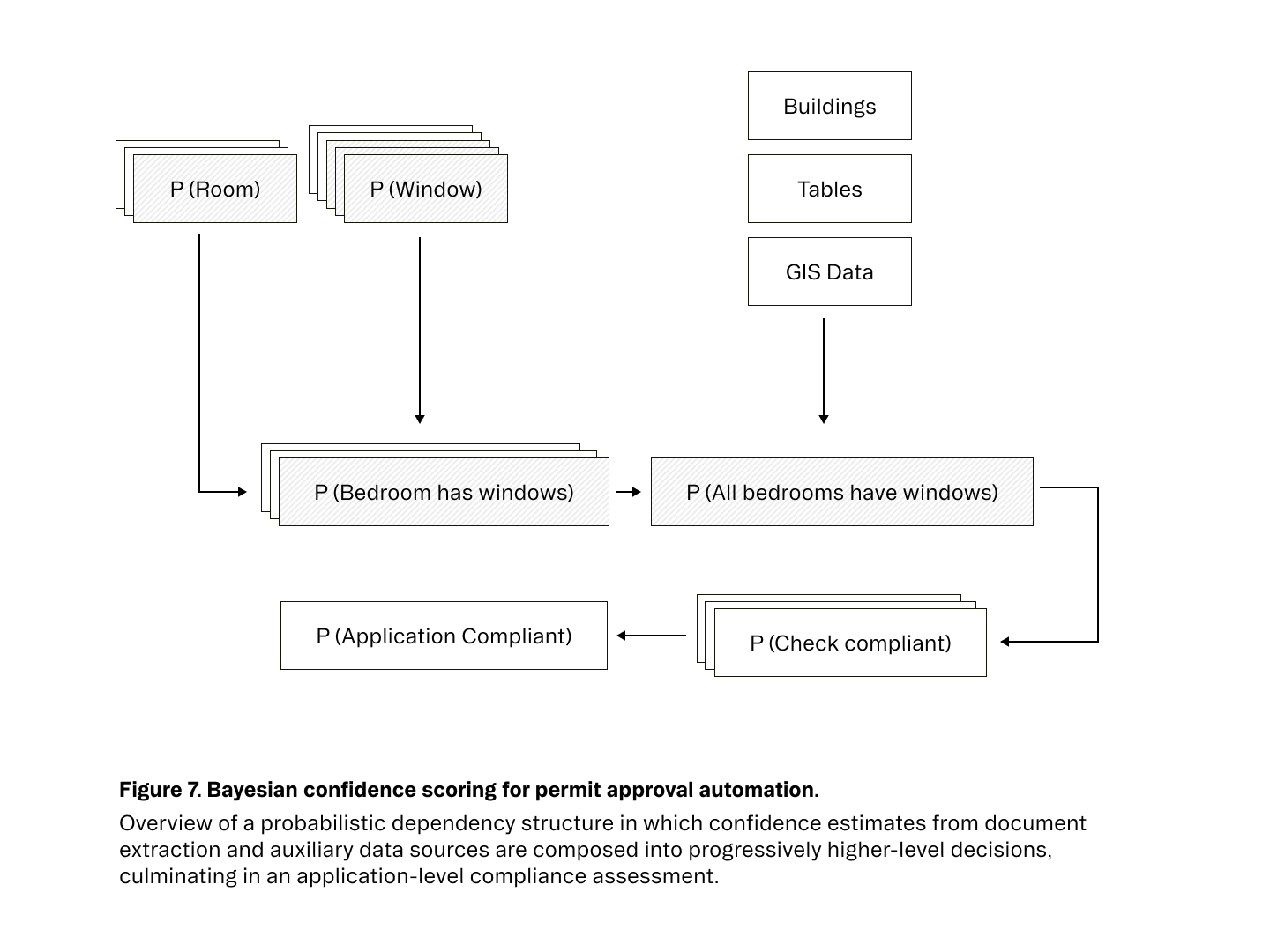

Our confidence scoring architecture operates at three hierarchical levels, each consuming outputs from the previous tier along with original inputs. Figure 2 uses a common zoning regulation, where bedrooms need a window, to illustrate this.

The extraction system is the core ML system that turns unstructured blueprints (images) into structured information (JSON) like windows and rooms. As seen in Figure 3, these then form the basis of the probabilities that:

SoTA vision models provide a confidence score that is a combination of existence as well as class confidence. These outputs, illustrated in Figure 4, are used in conjunction with additional signals to estimate confidence that an object is a bedroom or a window:

In the long run, we want to lean on any production data flywheels and signals as opposed to hand-crafted features.
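To make the hand-crafted starting point concrete, here is a minimal sketch of one way to fold extra signals into a detector score. It assumes a detector that reports objectness and class probability separately, and it combines signals with a hand-weighted logistic model. The signal name `label_text_match` and every weight are hypothetical, chosen only for illustration.

```python
import math


def detector_score(objectness, class_prob):
    # Many detectors report a single score that is the product of
    # "something is here" and "it is this class".
    return objectness * class_prob


def logit(p, eps=1e-6):
    # Clamp to avoid infinities at exactly 0 or 1.
    p = min(max(p, eps), 1 - eps)
    return math.log(p / (1 - p))


def value_confidence(det_score, signals, weights, bias=0.0):
    """Adjust the detector score in log-odds space with extra signals.

    signals: dict of hypothetical feature name -> numeric value
    weights: dict of feature name -> hand-set weight (assumed, not learned)
    """
    z = bias + logit(det_score)
    for name, value in signals.items():
        z += weights.get(name, 0.0) * value
    return 1 / (1 + math.exp(-z))


score = detector_score(objectness=0.9, class_prob=0.8)
# Hypothetical signal: OCR found the word "bedroom" inside the room polygon.
boosted = value_confidence(score, {"label_text_match": 1.0}, {"label_text_match": 1.5})
```

With no extra signals the function returns the detector score unchanged, so additional evidence only moves the estimate up or down from that baseline. Once production feedback is available, the hand-set weights are the obvious thing to replace with learned ones.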

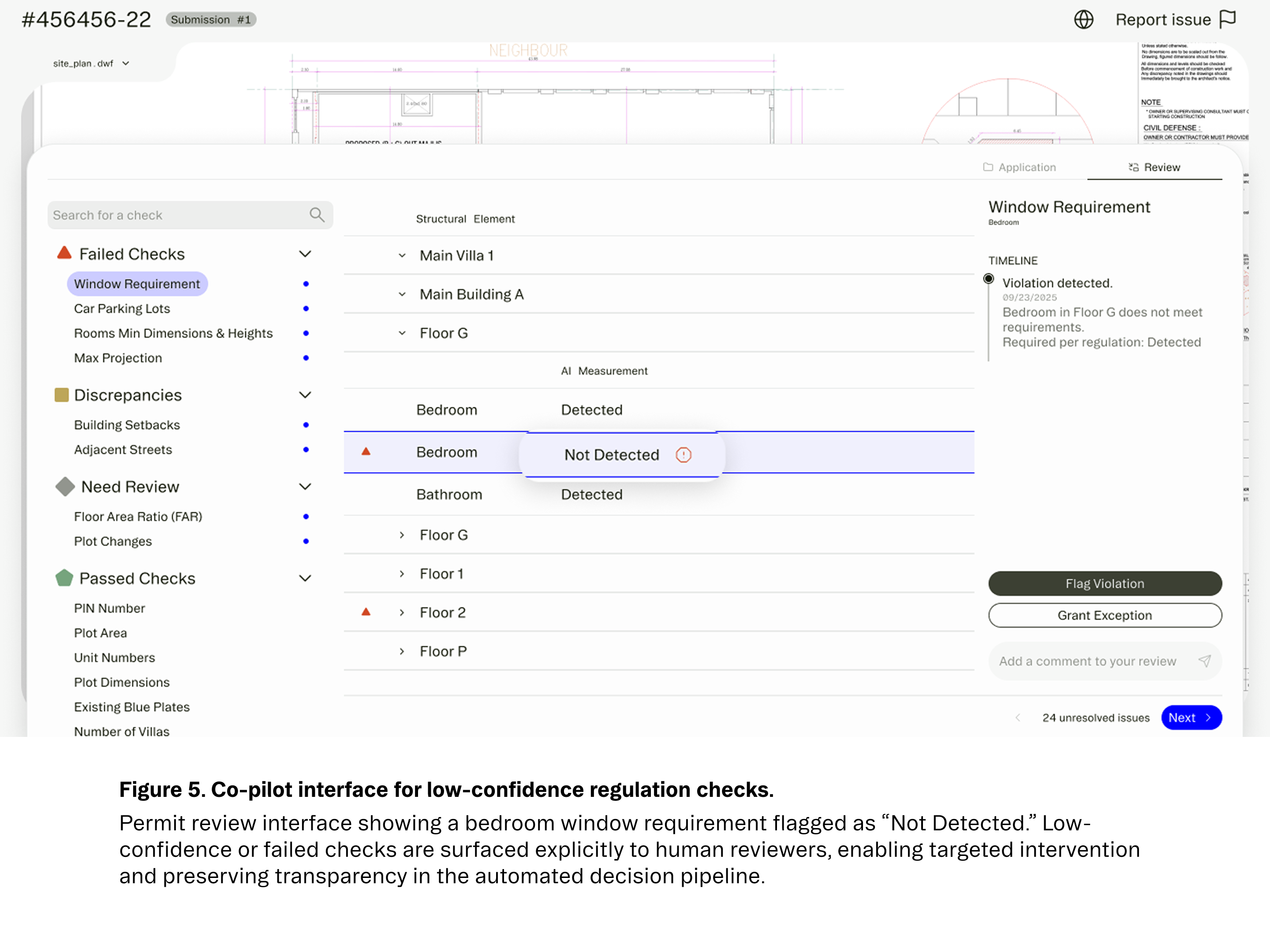

In our co-pilot application, lower-confidence checks are flagged for human attention, as shown in Figure 5. This level of transparency helps our AI systems build trust with end users by clearly communicating when automated decisions require review.

Value Confidence forms the input basis of the Bayesian system, along with other application properties. Examples include:

When the confidence scores for all regulation checks are high, we can mark the application as fully automatable, giving our partners the option to completely remove humans from the loop (Figure 6).
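As a rough sketch, the "all checks are high" rule could look like the following. The function name, the check names, and the 0.95 threshold are assumptions for illustration.

```python
def is_fully_automatable(check_confidences, threshold=0.95):
    """Return True only if every regulation check clears the threshold.

    check_confidences: dict of check name -> confidence in [0, 1].
    An application with no checks at all is treated as not automatable,
    so an empty or unparseable submission never slips through.
    """
    if not check_confidences:
        return False
    return all(c >= threshold for c in check_confidences.values())


application = {"bedroom_has_window": 0.99, "setback_distance": 0.97}
needs_human = {"bedroom_has_window": 0.99, "setback_distance": 0.80}
```

A single low-confidence check is enough to send the whole application back to a human reviewer, which is the conservative behavior you want when the alternative is approving a permit nobody looked at.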

Bringing these pieces together, we arrive at a unified confidence scoring system in which uncertainty is explicitly represented and propagated across the full permitting workflow. Value-level confidence from document extraction feeds check-level confidence for individual regulations, which in turn aggregates into an application-level confidence signal that governs automation decisions.

Figure 7 summarizes this hierarchical structure as a probabilistic dependency graph, making explicit how signals from documents and auxiliary data sources combine to support transparent, end-to-end automation.
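The propagation can be illustrated with a toy network evaluated by brute-force enumeration. This sketch assumes binary nodes and independent parents, and every number in the conditional probability tables is invented for the example. A real network would have more nodes, learned tables, and probably a proper inference library.

```python
from itertools import product


def marginal_true(parent_probs, cpt):
    """P(child is True) for a child with independent binary parents.

    parent_probs: list of P(parent_i is True)
    cpt: dict mapping a tuple of parent states (bools) to
         P(child is True | those states)
    """
    total = 0.0
    for states in product([True, False], repeat=len(parent_probs)):
        weight = 1.0
        for p, s in zip(parent_probs, states):
            weight *= p if s else 1 - p
        total += weight * cpt[states]
    return total


# Value level: confidence that each extraction is correct (illustrative).
p_bedroom, p_window = 0.95, 0.90

# Check level: P(check result is correct | which extractions are correct).
check_cpt = {
    (True, True): 0.99,
    (True, False): 0.30,
    (False, True): 0.30,
    (False, False): 0.10,
}
p_check = marginal_true([p_bedroom, p_window], check_cpt)

# Application level: correct only if every check is correct (a hard AND).
p_other_check = 0.97
and_cpt = {s: float(all(s)) for s in product([True, False], repeat=2)}
p_application = marginal_true([p_check, p_other_check], and_cpt)
```

Enumeration is exponential in the number of parents, so it only works for small fan-in, but it makes the dependency structure easy to read: uncertainty at the value level shows up, discounted, at every level above it.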

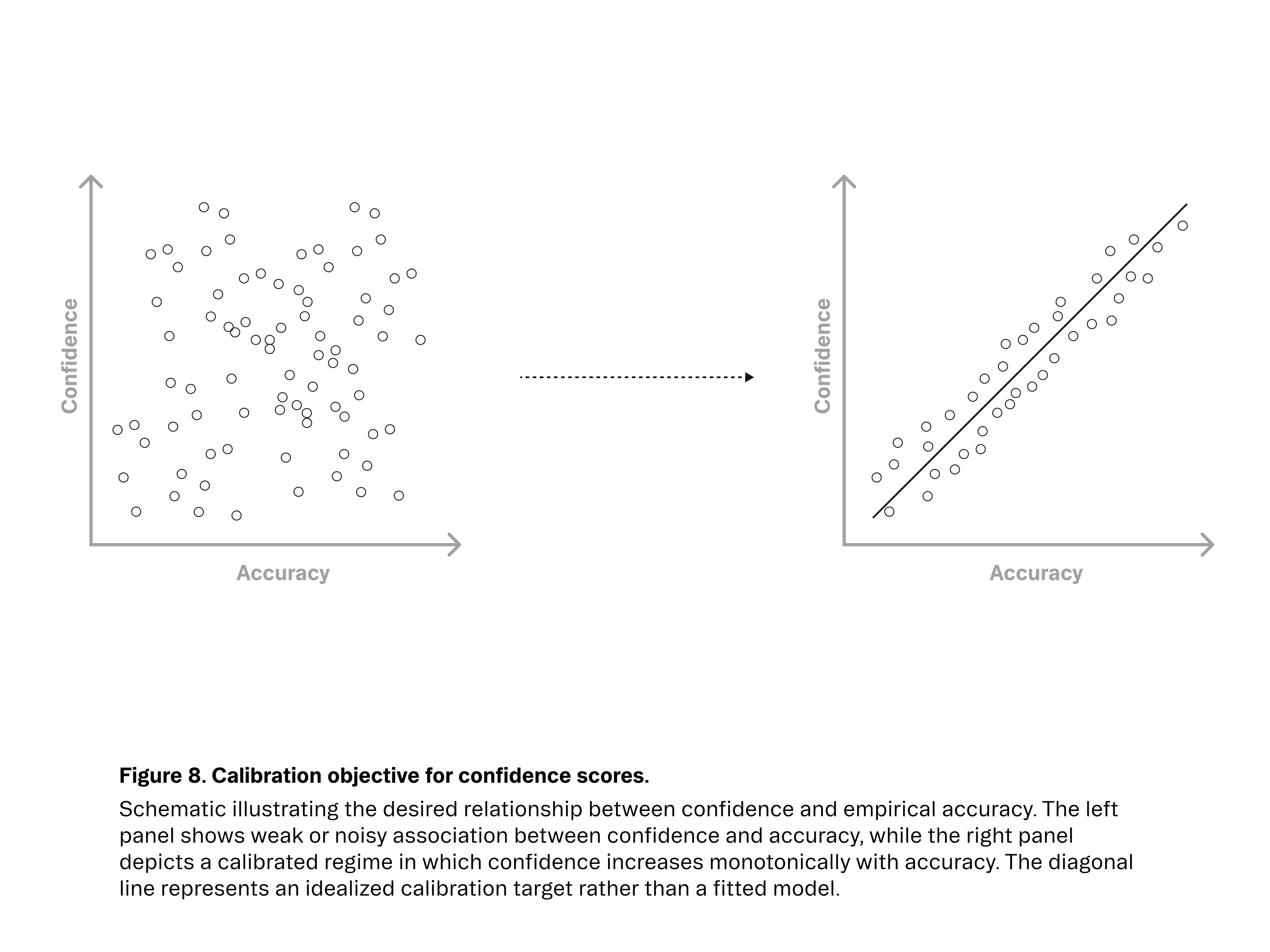

How do we know our confidence scores are any good? Confidence scores for the confidence scores? A useful system will generate high confidence scores when accuracy is high, and lower confidence scores when accuracy is lower. A natural starting place is a correlation metric such as Pearson or Spearman. However, on certain values or regulations our ML system has very high accuracy across the board, so there is little to no variance in accuracy. In these cases correlation breaks down as a metric. Figure 8 visualizes this calibration goal, highlighting the difference between weak correlation and a well-aligned confidence–accuracy relationship.
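The breakdown is easy to see from the definition of Pearson correlation, which divides by the standard deviation of each variable. A minimal sketch with made-up data:

```python
import math


def pearson(xs, ys):
    """Pearson correlation, or None when either input has zero variance."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        return None  # undefined: the formula would divide by zero
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    return sxy / math.sqrt(sxx * syy)


# Mixed outcomes: correlation is informative.
mixed = pearson([0.2, 0.9, 0.3, 0.8], [0, 1, 0, 1])

# Every prediction was correct: accuracy has no variance, so the
# metric is undefined no matter how sensible the confidences are.
all_correct = pearson([0.90, 0.80, 0.95], [1, 1, 1])
```

Near-zero variance is almost as bad as zero variance: a single wrong example in a large batch can swing the coefficient wildly.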

If confidence scores are constrained to map directly to accuracy, then error or loss scoring like Expected Calibration Error or RMSE variants can be used. In practice we relax this constraint and use a precision-blended scoring system to measure correlation when there is variance and accuracy when there is not.
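For reference, here is a minimal sketch of Expected Calibration Error with equal-width bins. It is the standard textbook formulation, not our blended metric, and the bin count of 10 is just a common default.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """Weighted average gap between confidence and accuracy per bin.

    confidences: floats in [0, 1]
    correct: 0/1 outcomes, same length as confidences
    """
    n = len(confidences)
    bins = [[] for _ in range(n_bins)]
    for c, y in zip(confidences, correct):
        idx = min(int(c * n_bins), n_bins - 1)  # put c == 1.0 in the last bin
        bins[idx].append((c, y))

    ece = 0.0
    for members in bins:
        if not members:
            continue
        avg_conf = sum(c for c, _ in members) / len(members)
        accuracy = sum(y for _, y in members) / len(members)
        ece += (len(members) / n) * abs(accuracy - avg_conf)
    return ece


# Four predictions at 0.95 confidence, three of them right:
# one bin, accuracy 0.75, so the gap is about 0.2.
overconfident = expected_calibration_error([0.95] * 4, [1, 1, 1, 0])
```

ECE penalizes any gap between stated confidence and observed accuracy, which is exactly the constraint that gets relaxed when a score only needs to rank decisions well rather than match accuracy numerically.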

Part of delivering value at the application level involves targeted inference and engineering strategies beyond just ML evals. To process permits faster, we aim to batch and parallelize as much of the computation as possible based on dependency flows. We also add caching at all levels of inference, for both value extraction and confidence scoring. ML datasets also never start out covering the long tail of production edge cases like empty applications, corrupted files, conflicting information, and overlapping text. Handling these edge cases thoughtfully is often the difference between a demo and a product people actually trust.
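A minimal sketch of dependency-aware parallelism with caching, using only the Python standard library. The node names, the `compute` callback signature, and the in-memory dict cache are assumptions for illustration. A production cache would more likely be keyed on a hash of the inputs than on a node name.

```python
from concurrent.futures import ThreadPoolExecutor
from graphlib import TopologicalSorter


def run_in_dependency_order(deps, compute, cache=None, max_workers=4):
    """Run compute(node, inputs) for every node, parallelizing each wave.

    deps: dict of node -> set of nodes it depends on
    compute: hypothetical callback taking (node, {dependency: result})
    cache: dict of node -> result; cached nodes are not recomputed
    """
    cache = {} if cache is None else cache
    sorter = TopologicalSorter(deps)
    sorter.prepare()
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        while sorter.is_active():
            # Everything returned here has all of its dependencies done,
            # so the whole wave can run concurrently.
            ready = list(sorter.get_ready())
            todo = [n for n in ready if n not in cache]
            inputs = [{d: cache[d] for d in deps.get(n, ())} for n in todo]
            for node, result in zip(todo, pool.map(compute, todo, inputs)):
                cache[node] = result
            sorter.done(*ready)
    return cache


calls = []


def compute(node, inputs):
    calls.append(node)  # stand-in for model inference
    return (node, sorted(inputs))


deps = {"check": {"bedroom", "window"}, "application": {"check"}}
results = run_in_dependency_order(deps, compute)
# A second run with the same cache does no new work.
run_in_dependency_order(deps, compute, cache=results)
```

Here `bedroom` and `window` have no dependencies and run in the same wave, while `check` and `application` wait their turn.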

Brain Co. partners with institutions in Government, Energy, and Healthcare, among others. If you are interested in deploying high-impact AI into society (and then writing promotional blog posts about it), we’d love to hear from you.